Summary: Feature Engineering in Machine Learning transforms raw data into meaningful features to boost model performance. This process involves cleaning, transforming, selecting, extracting, and iterating data. Using techniques like handling missing values and encoding enhances accuracy and efficiency. Advanced tools further streamline the creation of robust predictive models for success.

Introduction

Feature Engineering in Machine Learning helps improve how models understand and process data. It involves selecting, transforming, or creating new features to make predictions more accurate. Without proper Feature Engineering, even the best algorithms may not perform well.

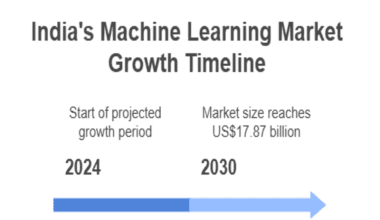

Machine Learning is growing rapidly. In 2021, the global market was worth $15.44 billion. Experts predict it will reach $209.91 billion by 2029, growing at an impressive 38.8% per year. This blog will explain Feature Engineering, why it matters, and how it helps build better Machine Learning models—even if you’re new to the topic.

Key Takeaways

- Feature Engineering in Machine Learning improves model accuracy by converting raw data into meaningful features.

- It involves data cleaning, transformation, selection, extraction, and iteration.

- Effective Feature Engineering reduces errors, speeds up learning, and prevents overfitting.

- Handling missing values, encoding, scaling, and interaction creation optimise data quality.

- Tools like Featuretools, AutoFeat, and TPOT simplify the workflow for better predictions.

What is Feature Engineering?

A feature is information that helps a Machine Learning model make decisions. Think of it like ingredients in a recipe—each one contributes to the final dish.

For example, if a model predicts house prices, features could be the number of bedrooms, location, and house size. Similarly, in a spam detection system, features might include the presence of certain words or the length of an email.

Features act like clues that guide the model in making accurate predictions. Good features improve accuracy, while poor features can mislead the model. Selecting the right features is essential for better results.

Benefits of Feature Engineering

Feature engineering helps Machine Learning models make better predictions by improving data quality. It ensures that the model focuses on the most useful information, leading to more accurate and reliable results. Here are some key benefits:

- Improves Accuracy: Well-designed features help the model understand patterns better, leading to better predictions.

- Reduces Errors: Feature Engineering helps the model avoid mistakes by cleaning and organising data.

- Speeds Up Learning: The model learns faster when it works with meaningful data.

- Handles Complex Data: Feature engineering simplifies raw data, making it easier for the model to process.

Steps of Feature Engineering

While different Data Scientists may follow different approaches, most Feature Engineering workflows include a few common steps. These steps help clean the data, enhance its quality, and make it more suitable for the model to understand patterns and make accurate predictions.

Data Cleaning

Before selecting or modifying features, it is crucial to clean the dataset. Data cleaning, also known as data cleansing or scrubbing, involves identifying and fixing errors, missing values, and inconsistencies. This ensures the dataset is accurate, complete, and free from biases that could negatively impact the model’s performance.

Data Transformation

Data transformation is converting raw data into a more structured and usable format. This can involve:

- Handling missing values by filling in gaps or removing incomplete records.

- Converting data types (e.g., changing text-based dates into numerical formats).

- Standardising values to ensure consistency across different sources.

- Aggregating data to summarise information in a meaningful way.

Transforming data makes it easier to analyse and ensures Machine Learning models can interpret it correctly.

Feature Selection

Feature selection is choosing the most relevant features from the dataset while removing those that add little or no value. Selecting the right features can improve model efficiency and prevent overfitting. Techniques such as correlation analysis, mutual information, and statistical tests help identify the most useful features of the model.

Feature Extraction

Feature extraction involves creating new features from the existing data to highlight important patterns. This is useful when raw data is too complex or unstructured.

For example, in text analysis, a paragraph of text can be transformed into numerical values using techniques like word embeddings or TF-IDF. In image recognition, pixel data can be converted into meaningful shape and texture descriptors.

Feature Transformation

Feature transformation involves modifying features to make them more beneficial for the model. This can include:

- Scaling and normalisation: Adjusting values so they are within a specific range.

- Encoding categorical data: Converting text-based categories into numerical values.

- Polynomial transformations: Creating new features by combining existing ones in different ways.

Feature Iteration

After initial feature selection and transformation, the features must be tested and refined. Feature iteration involves experimenting with different feature combinations, adding new features, or removing redundant ones based on the model’s performance. This step is crucial for improving accuracy and ensuring the model generalises well to new data.

Common Feature Engineering Techniques

Well-crafted features help models understand patterns better and make more accurate predictions. Below are some common techniques used in Feature Engineering.

Handling Missing Values

Missing data can cause issues in Machine Learning models, leading to inaccurate predictions. There are several ways to handle missing values:

- Removing Missing Values: If only a few data points have missing values, removing them might be a simple solution. However, this is not ideal if too much data is lost.

- Filling with Mean, Median, or Mode: Replacing missing values with the mean or median ensures consistency for numerical data. The mode (most common value) can be used for categorical data.

- Using Predictive Methods: In some cases, advanced techniques like regression or Machine Learning models can predict missing values based on other features.

Encoding Categorical Variables

Machine Learning models use numerical data, so categorical variables must be converted into numbers. Here are some techniques:

- One-Hot Encoding: This method creates a new column for each category, indicating whether a data point belongs to that category with a 1 or 0. For example, a “Color” variable with values Red, Green, and Blue becomes three new columns: Color_Red, Color_Green, and Color_Blue, where each column contains 1 or 0 based on the colour.

- Label Encoding: This technique assigns a unique number to each category. For example, the categories Red, Green, and Blue may be assigned values 0, 1, and 2, respectively. This method is simple but may introduce a false numerical relationship between categories.

- Binning: This technique converts continuous values into categories by grouping similar values. For example, an “Age” variable ranging from 18 to 80 can be grouped into bins like 18-25, 26-35, 36-50, and 51-80, making it easier for models to understand.

Scaling and Normalisation

Scaling ensures that all numerical features have similar ranges, preventing large values from dominating smaller ones. Common techniques include:

- Standardisation: This method transforms data with a mean of 0 and a standard deviation of 1. It helps when data follows a normal distribution.

- Normalisation: This technique scales values between 0 and 1, making it useful when features have different units or scales.

Creating Interaction Features

Sometimes, relationships between features can improve model performance. Interaction features are created by combining existing features in meaningful ways:

- Feature Splitting: Breaking down a feature into multiple sub-features can uncover hidden patterns. For example, a “Date” feature can be split into “Year,” “Month,” and “Day.”

- Text Data Preprocessing: Text data needs special processing before being used in Machine Learning models. This includes:

- Removing Stop Words: Words like “the” and “and” add little value to the analysis.

- Stemming and Lemmatisation: These techniques reduce words to their root forms. For example, “running” becomes “run.”

- Vectorisation: Text data is converted into numerical values using techniques like TF-IDF or word embeddings so models can process it.

These Feature Engineering techniques help make data more meaningful and improve Machine Learning model performance.

Feature Engineering Tools

Feature engineering transforms raw data into valuable features that help Machine Learning models learn and predict better. Many tools simplify this process, allowing beginners to generate and select useful features with minimal manual effort.

Featuretools

Featuretools is a Python library that extracts new features automatically from structured data. It works with CSV files, databases, and multiple data tables. It uses user-defined operations and Machine Learning methods to generate features, supports time-based data, and integrates well with pandas and scikit-learn. Its built-in visualisation tools and clear tutorials help users explore the features that have been created.

AutoFeat

AutoFeat builds linear prediction models by automatically selecting and engineering features. It handles categorical data through one-hot encoding and prevents the creation of unrealistic features. Its models work similarly to scikit-learn tools, making it a practical choice for logistical data tasks.

TsFresh

TsFresh automatically calculates many characteristics from time series data. It extracts values like peaks, averages, and maximums, and then checks which features best explain the patterns in the data. This tool is especially useful for users dealing with time-based information.

ExploreKit

ExploreKit transforms basic features into more informative ones by applying common operations. Instead of testing every possibility, it uses meta learning to rank promising features, saving time and effort.

TPOT

TPOT uses genetic programming to search for the best combinations of features and Machine Learning pipelines. It handles missing values and categorical data while supporting regression, classification, and clustering models.

DataRobot

DataRobot automates the entire Machine Learning process, including Feature Engineering for time-dependent and text data. It integrates with popular Python libraries and offers interactive visualisations.

Alteryx

Alteryx provides a visual interface to extract, transform, and generate features from structured and unstructured data, making it easy to build data pipelines.

OneBM

OneBM directly interacts with database tables. It joins data from various sources and applies pre-defined methods to generate simple and complex features.

H2O.ai

H2O.ai offers both automatic and manual Feature Engineering options. It supports various data types and integrates seamlessly with CSV files, databases, and other tools, allowing users to visualise and refine their models.

Challenges in Feature Engineering

Feature engineering faces several challenges affecting Machine Learning models’ accuracy and speed. It requires careful planning and a good understanding of data. Developers must overcome obstacles to make models work well. Below are common challenges:

- Overfitting risks: Models may learn too much from training data and fail to perform well on new data.

- Curse of dimensionality: Too many features can make models confusing and slow.

- Computational complexity: Processing large amounts of data takes more time and computer power.

These challenges require smart strategies and proper tools to overcome. Solving these problems helps build better, more reliable models.

Concluding Thoughts

Feature engineering in Machine Learning proves essential for building robust predictive models. It transforms raw data into actionable insights, ensuring models understand underlying patterns effectively. This process involves data cleaning, transformation, selection, extraction, and iteration, each step driving higher accuracy and reliability.

Data Scientists can optimise performance and reduce errors by handling missing values and encoding categorical variables. Integrating powerful tools simplifies the process and addresses challenges like overfitting and dimensionality.

Embracing Feature Engineering unlocks potential, elevating Machine Learning outcomes. Now, companies and researchers have refined these practices. Those interested in learning Machine Learning can explore free Data Science courses by Pickl.AI.

The institution offers hands-on training in feature engineering and other essential concepts. With the right knowledge and tools, anyone can refine data and build high-performing Machine Learning models.

Frequently Asked Questions

What is Feature Engineering in Machine Learning?

Feature Engineering in Machine Learning transforms raw data into informative features that improve model accuracy. It involves cleaning, transforming, selecting, extracting, and iterating data to highlight patterns and reduce noise, enabling algorithms to make more accurate predictions and successfully enhance overall performance in various applications.

Why is Feature Engineering Essential in Machine Learning?

Feature Engineering in Machine Learning is crucial because it improves data quality and model performance. Data Scientists reduce noise, prevent overfitting, and enhance prediction accuracy by selecting and transforming the most relevant features. This process simplifies complex data, making it easier for algorithms to learn and produce reliable, insightful results.

What are Common Techniques Used in Feature Engineering in Machine Learning?

Common techniques in Feature Engineering in Machine Learning include handling missing values, encoding categorical variables, scaling, normalisation, and feature extraction. Data Scientists create interaction features, perform feature selection, and apply transformation methods like polynomial expansion. These techniques streamline data processing and help models capture essential patterns for improved prediction performance.