DEFINING DATA CLEANING

Data cleaning is considered one of the most important steps in machine learning. Also called data scrubbing or data cleansing, it is part of data preprocessing, the process of converting raw, unstructured data into clean, structured data. Raw data often contains missing values, noisy (meaningless) data, outliers, and other problems that reduce the accuracy of a model and lead to incorrect predictions.

We get huge amounts of data from multiple sources, and data scientists are often said to spend most of their time cleaning it. Suppose you are a data scientist at Amazon and want to increase your company’s sales. You need to analyze your customers’ data, so you collect all of it. But what if the collected data is corrupted or irrelevant? Decisions based on it could lead to losses. That is why data cleaning is an essential step that must be performed before training any machine learning model.

CHARACTERISTICS OF QUALITY DATA

Poor-quality data leads to improper decision-making, inaccurate predictions, reduced productivity, and more. Therefore, data quality must be assessed in order to improve the performance of an ML model. Using data effectively can also greatly increase the reliability and value of a brand, which is why businesses have started to give more importance to data quality.

Let’s discuss some of the characteristics of data quality.

Accuracy

Data accuracy describes whether the values stored for an object are correct. A dataset needs values that are both consistent and unambiguous.

Consistency

Values can be lost and data quality can degrade even before the dataset is imported into code. Therefore, we have to ensure that the data is uniform, i.e., that its consistency is maintained.

Unique Nature

Data collection should be as precise as possible, because imprecise data can lead to inaccurate and erroneous conclusions. Manipulating and summarizing data can also produce an interpretation that differs from the one implied by the data at a lower, more detailed level.

Validity

Data validity is the accuracy and precision of the findings made from the collected data. Valid data enables appropriate and accurate inferences to be made from the sample that can be applied to the complete population.

To have a valid dataset, you must avoid the following:

- Insufficient data

- Excessive data variance

- Incorrect sample selection

- Use of an improper measurement method for analysis

Relevance & completeness

Data must be gathered for a reason that justifies the effort involved, and it must be gathered at the appropriate time: data collected too early or too late may be inaccurate and lead to bad conclusions.

Completeness indicates whether the dataset contains the information relevant to the current and future needs of the organization.
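Judging relevance to business needs takes domain knowledge, but one simple, partial proxy for completeness is the share of non-missing values in each column. A minimal pandas sketch, assuming a small hypothetical customer table (the column names are illustrative):

```python
import numpy as np
import pandas as pd

# Hypothetical customer records with some gaps
df = pd.DataFrame({
    "customer_id": [1, 2, 3, 4],
    "email": ["a@x.com", None, "c@x.com", None],
    "age": [34, 29, np.nan, 41],
})

# Share of non-missing values per column (1.0 means fully populated)
completeness = df.notna().mean()
print(completeness)
```

A low fill rate in a column the organization depends on is a signal to revisit how that data is collected.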

DATA CLEANING STEPS

Data cleaning is carried out in several steps:

- Removal of unwanted observations

- Fixing structural errors

- Managing unwanted outliers

- Handling missing data

- Handling noisy data

- Validate and QA

Let us discuss them in detail!

Removal of unwanted observations

The first step in data cleaning is to remove unnecessary observations, i.e., duplicate or irrelevant observations, from your dataset.

Duplicate observations must be removed before training, because they give repeated records extra weight and can distort the results. They typically appear when data is collected and combined from multiple sources, or received from clients or other departments.

Irrelevant observations are those that are not related to the problem statement at all. For example, if you are building a model to predict only the price of a house, you do not need information about the people living there. Removing such observations reduces noise and can improve your model’s accuracy.
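Both removals take one call each in pandas. The sketch below assumes a small hypothetical house-price table; the column names (`area`, `price`, `owner_name`) are illustrative, with `owner_name` standing in for information about the people living in the house.

```python
import pandas as pd

# Hypothetical house listings; the second and third rows are exact duplicates
houses = pd.DataFrame({
    "area": [120, 85, 85, 200],
    "price": [300000, 210000, 210000, 540000],
    "owner_name": ["Ana", "Ben", "Ben", "Chen"],  # not needed to predict price
})

# Remove exact duplicate rows, keeping the first occurrence
houses = houses.drop_duplicates()

# Remove a column that is irrelevant to the price-prediction problem
houses = houses.drop(columns=["owner_name"])
print(houses)
```

`drop_duplicates()` only catches exact repeats; near-duplicates (for example, the same house with a typo in one field) need the structural fixes described next.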

Fixing structural errors

Structural errors occur when values that mean the same thing are recorded as different categories. Typical causes are typos (misspelled words) and inconsistent capitalization, and they occur mostly in categorical data. For example, "Capital" and "capital" have the same meaning but are recorded as two different classes in the dataset. Another example is a mix of NaN and None values: both indicate that a feature value is missing, so they should be represented consistently. Such errors should be identified and replaced with the appropriate values.
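A minimal pandas sketch of these fixes, assuming a hypothetical `city` column that mixes capitalizations, stray spaces, the text "None", and real missing values:

```python
import numpy as np
import pandas as pd

# Hypothetical categorical column with inconsistent spelling and missing-value markers
df = pd.DataFrame({"city": ["Capital", "capital", " Capital ", "None", None, np.nan, "Harbor"]})

# Normalize whitespace and capitalization so the three "Capital" variants become one class
df["city"] = df["city"].str.strip().str.title()

# Treat the text placeholder "None" as a real missing value (NaN)
df["city"] = df["city"].replace({"None": np.nan})

print(df["city"].value_counts(dropna=False))
```

Which placeholders count as "missing" depends on the data source, so the replacement mapping is something to check for each dataset.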

Managing unwanted outliers

An outlier is a value that lies far from the other observations in the data. Depending on the model type, outliers can be problematic: linear regression models, for instance, are less robust to outliers than decision tree models. You will frequently come across one-off observations that, at first glance, do not seem to fit the data you are examining. If you have a legitimate reason to remove an outlier, such as an incorrect data entry, removing it will improve the quality of the data you are working with. On the other hand, an outlier can occasionally support a theory you are working on, so an outlier does not necessarily mean that something is wrong. This step is needed to evaluate whether each outlier is reliable. Consider deleting an outlier only if it appears to be incorrect or irrelevant to the analysis.

Example of an outlier:

Suppose we have the following set of numbers:

{3,4,7,12,20,25,95}

In the above set of numbers, 95 is considered the outlier because it is very far from the other numbers in the set.
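"Very far" can be made precise with a rule. One common choice (not the only one) is Tukey's fences: flag any value more than 1.5 times the interquartile range (IQR) below the first quartile or above the third quartile. A sketch applying it to the same set of numbers with pandas:

```python
import pandas as pd

values = pd.Series([3, 4, 7, 12, 20, 25, 95])

# Quartiles (pandas uses linear interpolation by default)
q1 = values.quantile(0.25)
q3 = values.quantile(0.75)
iqr = q3 - q1

# Tukey's fences: 1.5 * IQR beyond the quartiles
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr

outliers = values[(values < lower) | (values > upper)]
print(q1, q3, lower, upper)   # 5.5 22.5 -20.0 48.0
print(outliers.tolist())      # [95]
```

The rule only flags candidates; whether to delete 95 still depends on whether it is an error or a genuine observation.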

Handling missing data

We cannot ignore missing data, because many algorithms do not work well with (or do not accept) data that has missing values. Missing values are usually represented as NaN, None, or NA. There are two main ways to handle them:

- Dropping Missing Values

- Imputing Missing Values

Dropping Missing Values:

Dropping observations results in a loss of information, so dropping missing values is not an ideal solution. The absence of a value may itself carry information. Moreover, in the real world you often need to make predictions on new data even when some features are missing. So, before dropping values, make sure you do not lose valuable information. This approach is typically used when the dataset is large and only a small share of its values is missing.
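In pandas, dropping is done with `DataFrame.dropna()`, whose `subset` and `thresh` parameters let you drop more selectively than "any row with any gap". A sketch on a small hypothetical table:

```python
import numpy as np
import pandas as pd

# Hypothetical records with gaps in different columns
df = pd.DataFrame({
    "age": [25, np.nan, 40, 31],
    "income": [50000, 62000, np.nan, np.nan],
    "city": ["Lima", "Oslo", "Pune", None],
})

# Drop every row that has at least one missing value (keeps only row 0)
complete_rows = df.dropna()

# Drop rows only when a specific column is missing
has_age = df.dropna(subset=["age"])

# Keep rows that have at least 2 non-missing values
mostly_complete = df.dropna(thresh=2)
```

Note how aggressive the plain `dropna()` is here: it discards three of four rows, which illustrates the information loss described above.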

Imputing Missing Values:

Imputation retains most of the data and information in a dataset by substituting missing values with other values. However, no matter how advanced your imputation process is, it can still lose information: even a dedicated imputation model only reinforces the patterns already provided by the other features.

There are two types of data: categorical and numerical. Missing categorical values are usually filled with the mode (the most frequent value), while missing numerical values are usually filled with the mean or the median.
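A minimal pandas sketch of both cases using `fillna()`, assuming a hypothetical table with one categorical and two numerical columns. Using the median for `weight` is only an illustration; the median is often preferred when a column is skewed, because it is less affected by extreme values.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    "color": ["red", "blue", None, "red"],
    "height": [1.0, 2.0, np.nan, 9.0],
    "weight": [10.0, np.nan, 14.0, 30.0],
})

# Categorical column: fill with the mode (most frequent value)
df["color"] = df["color"].fillna(df["color"].mode()[0])

# Numerical columns: fill with the mean, or with the median
df["height"] = df["height"].fillna(df["height"].mean())    # mean of 1, 2, 9 is 4.0
df["weight"] = df["weight"].fillna(df["weight"].median())  # median of 10, 14, 30 is 14.0
print(df)
```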

Handling noisy data

Noisy data is meaningless data that cannot be interpreted correctly by machines. It can be caused by faulty data collection, data entry errors, and similar problems. It can be handled in the following ways:

- Binning Method:

This method smooths sorted data. The data is divided into segments (bins) of equal size, and each bin is handled separately: every value in a bin can be replaced by the bin mean, or by the closest bin boundary (the bin’s minimum or maximum value).

- Regression:

Here, the data is smoothed by fitting it to a regression function. The regression can be simple linear regression (one independent variable) or multiple linear regression (several independent variables).

- Clustering:

This approach groups similar values into clusters. Outliers are the values that fall outside the clusters, although some may go undetected.
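The binning and regression methods can be sketched in a few lines. The function names below are hypothetical, and the bins hold an equal number of values (the last bin may be smaller if the count does not divide evenly). The regression smoother fits a straight line with NumPy's least-squares `polyfit`.

```python
import numpy as np

def smooth_by_bin_means(values, bin_size):
    """Sort the data, split it into equal-size bins, and replace each value by its bin mean."""
    data = sorted(values)
    smoothed = []
    for start in range(0, len(data), bin_size):
        bin_values = data[start:start + bin_size]
        bin_mean = sum(bin_values) / len(bin_values)
        smoothed.extend([bin_mean] * len(bin_values))
    return smoothed

def smooth_by_bin_boundaries(values, bin_size):
    """Replace each value by whichever boundary (min or max) of its bin is closer."""
    data = sorted(values)
    smoothed = []
    for start in range(0, len(data), bin_size):
        bin_values = data[start:start + bin_size]
        low, high = bin_values[0], bin_values[-1]
        smoothed.extend(low if v - low <= high - v else high for v in bin_values)
    return smoothed

def smooth_by_regression(x, y):
    """Replace y by the values of a least-squares straight line fitted to (x, y)."""
    slope, intercept = np.polyfit(x, y, deg=1)
    return slope * np.asarray(x, dtype=float) + intercept

data_points = [4, 8, 15, 21, 21, 24, 25, 28, 34]
print(smooth_by_bin_means(data_points, 3))       # [9.0, 9.0, 9.0, 22.0, 22.0, 22.0, 29.0, 29.0, 29.0]
print(smooth_by_bin_boundaries(data_points, 3))  # [4, 4, 15, 21, 21, 24, 25, 25, 34]
```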

Validate and QA

At the end of the data cleaning process, you should be able to answer the following questions:

- Does the data follow all the requirements for its field?

- Does the data appear to be meaningful?

- Does it support or contradict your working theory? Does it offer any new information/insights?

- Can you identify patterns in the data that will help you develop your next theory? If not, is there a problem with the quality of the data?
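Answering these questions takes judgment, but a few mechanical checks can be automated and rerun after every cleaning pass. A minimal sketch, where `quality_report` is a hypothetical helper and the data is illustrative:

```python
import pandas as pd

def quality_report(df):
    """Summarize basic checks to run after cleaning."""
    return {
        "rows": len(df),
        "missing_per_column": df.isna().sum().to_dict(),
        "duplicate_rows": int(df.duplicated().sum()),
        "dtypes": df.dtypes.astype(str).to_dict(),
    }

# Illustrative "cleaned" data that still contains one duplicate row
cleaned = pd.DataFrame({"age": [22, 25, 25], "score": [0.7, 0.9, 0.9]})
report = quality_report(cleaned)
print(report)
```

Field-specific requirements (allowed ranges, formats, categories) would be added as further checks for each dataset.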

The steps above are considered best practices for data cleaning. The figure below gives an overview of the data cleaning steps.

Image Source – https://www.geeksforgeeks.org/data-cleansing-introduction/

Data cleaning is a very time-consuming process, yet it is important. Why?

Let us see why it is important in machine learning or data science!

IMPORTANCE OF DATA CLEANING

Data cleansing plays a vital role in data science and machine learning, and it is the initial step in data preprocessing. It increases a model’s accuracy by dealing with missing values, irrelevant data, incomplete data, and similar problems. Almost all organizations depend on data, but only a few succeed in assessing its quality. As discussed above, data cleaning reduces errors and improves data quality by handling missing values, irrelevant data, and noisy data.

Data cleaning also helps you account for missing values and their impact on the model, which leads to higher accuracy, and it helps achieve data consistency. For example, suppose a company is shortlisting graduates for a particular job role, but the dataset contains the value 17 for the age feature, which is not plausible for a graduate and is almost certainly incorrect.
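Such values can be caught with a simple rule-based check. The sketch below assumes a hypothetical business rule that applicants must be at least 18; it flags violating rows and then uses `Series.mask()` to turn the impossible ages into missing values, so they can be reviewed or imputed instead of silently used.

```python
import pandas as pd

candidates = pd.DataFrame({"name": ["A", "B", "C"], "age": [23, 17, 26]})

# Hypothetical rule: a graduate applicant must be at least 18 years old
MIN_AGE = 18

# Flag rows that break the rule
invalid = candidates[candidates["age"] < MIN_AGE]
print(invalid)

# Replace rule-breaking ages with NaN (the column becomes float to hold NaN)
candidates["age"] = candidates["age"].mask(candidates["age"] < MIN_AGE)
```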

It also makes visualization easier, because the dataset becomes clear and meaningful after cleaning.

LIFE CYCLE OF ETL IN DATA CLEANING

Before starting with ETL, let’s understand what a data warehouse is. A data warehouse stores data from various sources so that it can be queried to provide meaningful insights. ETL stands for Extract, Transform, and Load. It is a data integration process that combines data collected from multiple sources into a single, consistent store and loads it into a data warehouse.

The main purpose of ETL is to:

- Extract the data from the various systems.

- Transform the raw data into clean data to ensure data quality and consistency. This is the step where data cleaning is performed.

- Finally, load the cleaned data into the data warehouse or any other targeted database.
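The three steps can be sketched end to end with pandas and Python's built-in `sqlite3`. This is a toy illustration under stated assumptions: two in-memory CSV strings stand in for real source systems, an in-memory SQLite database stands in for the warehouse, and the table name `sales` is hypothetical.

```python
import io
import sqlite3

import pandas as pd

# Extract: read raw data from two hypothetical sources
store_a = io.StringIO("customer,amount\nAna,10.5\nBen,20\nBen,20\n")
store_b = io.StringIO("customer,amount\nchen,7\nDia,\n")
raw = pd.concat([pd.read_csv(store_a), pd.read_csv(store_b)], ignore_index=True)

# Transform: clean the combined data (this is where data cleaning happens)
clean = (
    raw.drop_duplicates()                                   # remove the repeated Ben row
       .dropna(subset=["amount"])                           # drop the row with no amount
       .assign(customer=lambda d: d["customer"].str.title())  # fix capitalization
)

# Load: write the cleaned data to the target database
conn = sqlite3.connect(":memory:")
clean.to_sql("sales", conn, index=False)
loaded = pd.read_sql_query("SELECT customer, amount FROM sales ORDER BY customer", conn)
print(loaded)
conn.close()
```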

The diagram below shows the steps performed in ETL.

TOOLS & TECHNIQUES FOR DATA CLEANING

Data cleaning tasks can be automated using a variety of tools, including open-source and proprietary software. These tools typically offer a number of features for resolving data errors and problems, such as merging duplicate entries and filling in missing or null values. Many people also use data matching to locate duplicate or similar records.

Products and systems that offer data cleaning tools include:

- specialized data cleaning tools from vendors such as Data Ladder and WinPure;

- data quality software from vendors such as Datactics, Experian, Innovative Systems, Melissa, Microsoft, and Precisely;

- data preparation tools from vendors such as Altair, DataRobot, Tableau, Tibco Software, and Trifacta;

- customer and contact data management software from vendors such as Redpoint Global, RingLead, Synthio, and Tye;

- tools for cleansing data in Salesforce systems from vendors such as Cloudingo and Plauti; and

- open source tools, such as DataCleaner and OpenRefine

Pandas is a popular and very flexible Python library for data cleaning, with many functions for cleaning data. Let’s see an example of how pandas is used for data cleaning.

DATA CLEANING IN ML USING PANDAS

Pandas is a Python library that is widely used for data cleaning and data analysis in data science and machine learning. Let us perform data cleaning using pandas.

Here, we are using a dataset containing historical and projected rainfall and runoff for four Lake Victoria sub-regions. The dataset is provided by Open Africa and is released under a Creative Commons license.

Although the dataset has only 14 rows and 4 columns, it is very messy, which makes it well suited for learning data cleaning.

Import the Pandas library

Download the dataset and import it into your code. As the dataset is in Excel format, I’m using the read_excel() function.

Print the first 5 rows of the dataset

Next, print the dimensions of the dataset to see how many rows and columns it contains.

In the above output, we can see that the dataset is not loaded properly: there are some irrelevant rows and columns. First, skip the last two rows and display the dataset again.
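A sketch of the loading steps described so far (import, read, preview, dimensions, skip the last two rows), wrapped in a hypothetical helper. The file name is an assumption; use the path of your downloaded copy. `skipfooter` is a standard `read_excel()` parameter, and reading `.xlsx` files requires an Excel engine such as openpyxl.

```python
import pandas as pd

def load_rainfall(path):
    """Load the rainfall workbook, skipping the last two (non-data) rows."""
    df = pd.read_excel(path, skipfooter=2)
    print(df.head())   # first 5 rows
    print(df.shape)    # (number of rows, number of columns)
    return df

# Usage (hypothetical file name):
# df = load_rainfall("rainfall.xlsx")
```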

We can still see some additional columns, which need to be removed.
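When a spreadsheet has empty header cells, pandas names those columns `Unnamed: N`, so one way to remove them is to keep only columns whose names do not start with `Unnamed`. The frame below is hypothetical: the column names follow this walkthrough, but the values are made up for illustration.

```python
import numpy as np
import pandas as pd

# Hypothetical frame mimicking the messy spreadsheet (illustrative values)
df = pd.DataFrame({
    "Month, period": ["Jan, 1951-1960", "Feb, 1951-1960"],
    "Lake Victoria": ["5 mm", "7 mm"],
    "Simiyu": ["4 mm", "6 mm"],
    "Unnamed: 3": [np.nan, np.nan],
    "Unnamed: 4": [np.nan, np.nan],
})

# Keep only the columns whose header does not start with "Unnamed"
df = df.loc[:, ~df.columns.str.startswith("Unnamed")]
print(df.columns.tolist())
```

If you know exactly which columns to drop, `df.drop(columns=[...])` with their names is an equally valid choice.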

Now there are no irrelevant columns. Next, we check whether the dataset contains any missing values.

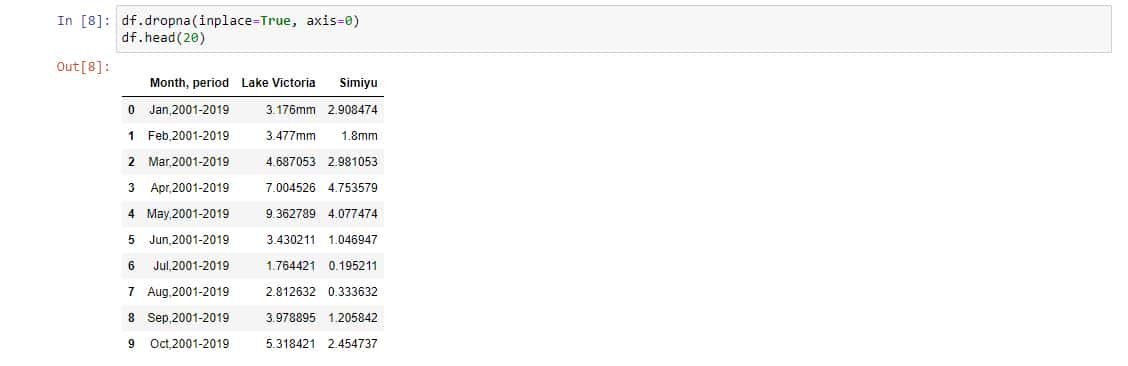

We can see that the last two rows contain missing values, so we remove them.
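Counting and removing the missing values can be sketched with `isnull().sum()` and `dropna()`. The frame below is hypothetical, with its last two rows empty to mirror the situation described:

```python
import numpy as np
import pandas as pd

# Hypothetical frame whose last two rows are empty (illustrative values)
df = pd.DataFrame({
    "Month, period": ["Jan, 1951-1960", "Feb, 1951-1960", np.nan, np.nan],
    "Lake Victoria": ["5 mm", "7 mm", np.nan, np.nan],
})

# Count missing values in each column
print(df.isnull().sum())

# Drop the rows that contain missing values
df = df.dropna()
print(df.shape)
```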

Split the column named "Month, period" into two separate columns for clarity.

Now add these two columns, with new names, to the original data frame.

Now drop the "Month, period" column, as it is no longer required.
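The split, add, and drop steps can be sketched with `str.split(..., expand=True)`. This assumes the values look like "month, period" (the sample values and the new names `Month` and `Period` are hypothetical). `n=1` splits only on the first comma.

```python
import pandas as pd

# Hypothetical values in the combined column
df = pd.DataFrame({
    "Month, period": ["Jan, 1951-1960", "Feb, 1951-1960"],
    "Lake Victoria": ["5 mm", "7 mm"],
})

# Split "Month, period" on the first comma into two parts
parts = df["Month, period"].str.split(",", n=1, expand=True)

# Add the parts as new columns, trimming surrounding spaces
df["Month"] = parts[0].str.strip()
df["Period"] = parts[1].str.strip()

# The combined column is no longer needed
df = df.drop(columns=["Month, period"])
print(df)
```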

Some columns contain the string "mm", so define a function that removes it.

Now apply the previous function to the columns Lake Victoria and Simiyu:
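A sketch of such a function and how to apply it, assuming the values are strings like "5.2 mm" (illustrative). The name `remove_mm` is hypothetical, and non-string values (such as NaN) are passed through unchanged.

```python
import pandas as pd

# Hypothetical rainfall values with a unit suffix
df = pd.DataFrame({
    "Lake Victoria": ["5.2 mm", "7 mm"],
    "Simiyu": ["4.1mm", "6.3 mm"],
})

def remove_mm(value):
    """Strip the 'mm' unit (and surrounding spaces) from a string value."""
    if isinstance(value, str):
        return value.replace("mm", "").strip()
    return value

# Apply the function to each affected column
for column in ["Lake Victoria", "Simiyu"]:
    df[column] = df[column].apply(remove_mm)
print(df)
```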

Next, check the type of each column.

The Lake Victoria and Simiyu columns should be of type float, because they contain floating-point numbers. So convert them to float:
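Checking the column types and converting them can be sketched with `dtypes` and `astype(float)`. The frame below is hypothetical and assumes the "mm" suffix has already been removed, so the values are numeric strings.

```python
import pandas as pd

# Hypothetical columns after the "mm" suffix has been removed
df = pd.DataFrame({
    "Month": ["Jan", "Feb"],
    "Lake Victoria": ["5.2", "7"],
    "Simiyu": ["4.1", "6.3"],
})

print(df.dtypes)  # the rainfall columns are still stored as strings

# Convert the numeric columns to float
df["Lake Victoria"] = df["Lake Victoria"].astype(float)
df["Simiyu"] = df["Simiyu"].astype(float)
print(df.dtypes)
```

`astype(float)` raises an error on any value it cannot parse. `pd.to_numeric(..., errors="coerce")` is an alternative that turns such values into NaN instead.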

In the code above, we have dealt with noisy data, missing data, and irrelevant data. Try implementing data cleaning on another uncleaned dataset.

BENEFITS OF DATA CLEANING

Data cleansing has many benefits. Let’s have a look at them!

Image Source – https://www.analyticsvidhya.com/blog/2021/06/data-cleaning-using-pandas/

- It helps in removing errors and achieving better insights from the data.

- The decision-making process becomes easier.

- It increases the performance and accuracy of the model.

- Clean, up-to-date data helps the organization improve productivity and the quality of the work done by its employees.

- It helps maintain data integrity and data consistency, which also improves the model’s accuracy.

CONCLUSION

Data cleaning is very important for any machine learning model. Even though it is time-consuming, it is essential, because it leads to relevant and accurate predictions.

It is often said that up to 80% of the time in an ML project goes into cleaning data. So, whenever you are working on an ML project, make sure to clean the data before proceeding further.