Summary: This blog explores the key differences between ETL and ELT, detailing their processes, advantages, and disadvantages. Understanding these methods helps organizations optimize their data workflows for better decision-making.

Introduction

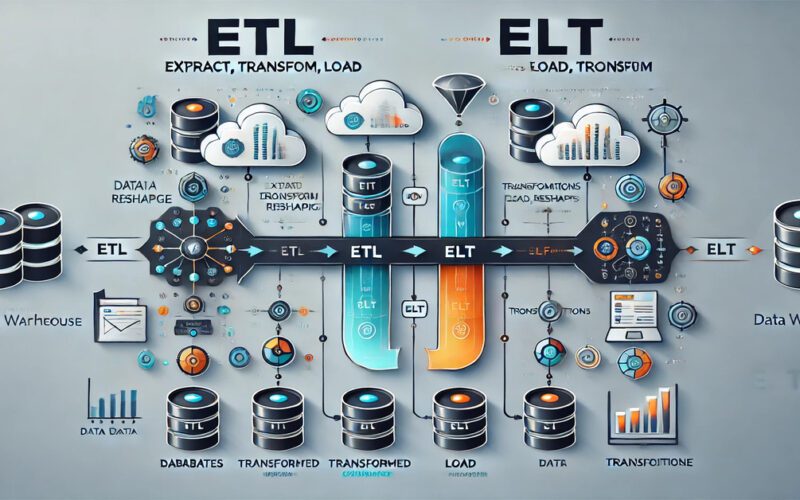

In today’s data-driven world, efficient data processing is crucial for informed decision-making and business growth. This blog explores the fundamental concepts of ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform), two pivotal methods in modern data architectures.

Understanding the differences between ETL and ELT is essential for organisations to optimise their data workflows and enhance performance. This blog’s objectives are to clarify the processes involved in ETL and ELT, highlight their respective advantages and disadvantages, and guide you in selecting the most suitable approach for your data management needs.

What is ETL?

ETL stands for Extract, Transform, and Load. It is a crucial data integration process that involves moving data from multiple sources into a destination system, typically a data warehouse. This process enables organisations to consolidate their data for analysis and reporting, facilitating better decision-making.

ETL is a data integration framework that helps organisations gather, clean, and store data from diverse sources in a centralised repository. By employing ETL, businesses ensure that their data is reliable, accurate, and ready for analysis. This process is essential in environments where data originates from various systems, such as databases, applications, and web services.

Detailed Explanation of Each Component

Each component plays a distinct role in moving data successfully from its sources to its destination. Understanding these components helps organisations optimise their data integration efforts.

Extract

The first step in the ETL process is Extract, where data is collected from various sources. This initial phase is critical because the quality and relevance of the extracted data directly influence the success of every subsequent step.

These sources include relational databases, flat files, APIs, cloud storage, and web scraping. During this phase, the ETL tool retrieves the raw data, which can be structured or unstructured. The key is to ensure that all relevant data is captured for further processing.
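To make the Extract step more concrete, here is a minimal Python sketch that pulls records from two of the source types mentioned above: a flat file (CSV) and an API (JSON). The in-memory payloads and function names such as `extract_all` are hypothetical stand-ins; a real pipeline would read files from disk and call endpoints over the network.

```python
import csv
import io
import json

# Hypothetical payloads standing in for a flat-file export and an API response.
CSV_EXPORT = """order_id,customer,amount
1,alice,19.99
2,bob,5.00
"""
API_RESPONSE = '[{"order_id": 3, "customer": "carol", "amount": 12.5}]'


def extract_csv(text):
    """Read rows from a CSV export; every value arrives as a string."""
    return list(csv.DictReader(io.StringIO(text)))


def extract_api(payload):
    """Parse a JSON API response into a list of dictionaries."""
    return json.loads(payload)


def extract_all():
    """Collect raw records from every source and tag each with its origin."""
    records = [{**row, "_source": "csv"} for row in extract_csv(CSV_EXPORT)]
    records += [{**row, "_source": "api"} for row in extract_api(API_RESPONSE)]
    return records


if __name__ == "__main__":
    for record in extract_all():
        print(record)
```

The CSV values all arrive as strings while the API values keep their JSON types; reconciling differences like this is work for the Transform step.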

Transform

Once the data is extracted, the next step is Transform. This phase is crucial for enhancing data quality and preparing it for analysis. Transformation involves various activities that help convert raw data into a format suitable for reporting and analytics.

Data transformation may include several activities, such as:

- Data Cleaning: Removing duplicates, correcting errors, and filling in missing values to enhance data quality.

- Normalisation: Standardising data formats and structures, ensuring consistency across various data sources.

- Aggregation: Summarising or consolidating data to derive meaningful insights, such as calculating totals or averages.
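The three transformation activities listed above can be sketched in plain Python. The sample rows, the rule of treating a missing amount as 0.0, and the function names are illustrative assumptions rather than a prescribed policy; many pipelines would flag or reject such rows instead.

```python
from collections import defaultdict

# Hypothetical raw rows: a duplicate, a missing amount, inconsistent country codes.
RAW_ROWS = [
    {"order_id": "1", "country": " uk ", "amount": "19.99"},
    {"order_id": "1", "country": " uk ", "amount": "19.99"},  # duplicate order
    {"order_id": "2", "country": "US", "amount": ""},  # missing value
    {"order_id": "3", "country": "us", "amount": "12.50"},
]


def clean(rows):
    """Data cleaning: drop repeated order IDs and fill missing amounts with 0.0 (assumed policy)."""
    seen, cleaned = set(), []
    for row in rows:
        if row["order_id"] in seen:
            continue
        seen.add(row["order_id"])
        amount = row["amount"].strip()
        cleaned.append({**row, "amount": float(amount) if amount else 0.0})
    return cleaned


def normalise(rows):
    """Normalisation: integer IDs and trimmed, upper-case country codes."""
    return [
        {"order_id": int(r["order_id"]), "country": r["country"].strip().upper(), "amount": r["amount"]}
        for r in rows
    ]


def aggregate(rows):
    """Aggregation: total order value per country."""
    totals = defaultdict(float)
    for r in rows:
        totals[r["country"]] += r["amount"]
    return dict(totals)


def transform(rows):
    return aggregate(normalise(clean(rows)))


if __name__ == "__main__":
    print(transform(RAW_ROWS))
```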

The transformation phase is essential because it ensures that the data is accurate and reliable before it is loaded, leading to better decision-making.

Load

The final component of ETL is Load. In this phase, the transformed data is inserted into the destination system, typically a data warehouse. This step is crucial because it makes the cleaned and organised data available for analysis, reporting, and decision-making.

The load process can vary depending on the organisation’s needs. It can occur in bulk, where large batches of data are uploaded at once, or incrementally, where only new or changed data is loaded continuously or at scheduled intervals. A successful load ensures that Analysts and decision-makers have access to up-to-date, clean data.
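The difference between bulk and incremental loading can be sketched with Python's built-in `sqlite3` module standing in for a data warehouse. The `orders` table and its `updated_at` column are hypothetical, and the incremental path uses a simple high-water-mark strategy, which is only one of several common approaches (change data capture is another).

```python
import sqlite3


def create_target(conn):
    """Create the hypothetical warehouse table if it does not exist yet."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT)"
    )


def bulk_load(conn, rows):
    """Bulk load: replace the table contents with a full batch in one transaction."""
    with conn:
        conn.execute("DELETE FROM orders")
        conn.executemany(
            "INSERT INTO orders (order_id, amount, updated_at) VALUES (?, ?, ?)", rows
        )


def incremental_load(conn, rows):
    """Incremental load: upsert only rows strictly newer than the high-water mark."""
    # ISO-8601 timestamps in one consistent format compare correctly as strings.
    (watermark,) = conn.execute(
        "SELECT COALESCE(MAX(updated_at), '') FROM orders"
    ).fetchone()
    new_rows = [row for row in rows if row[2] > watermark]
    with conn:
        conn.executemany(
            "INSERT OR REPLACE INTO orders (order_id, amount, updated_at) VALUES (?, ?, ?)",
            new_rows,
        )
    return len(new_rows)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_target(conn)
    bulk_load(conn, [(1, 19.99, "2024-01-01T00:00:00"), (2, 5.0, "2024-01-01T00:00:00")])
    # Only order 2 changed since the last load, so only it is written.
    print(incremental_load(conn, [(1, 19.99, "2024-01-01T00:00:00"), (2, 7.5, "2024-01-02T00:00:00")]))
```

A strict `>` comparison can miss late-arriving rows that share the watermark's timestamp, so production pipelines usually add safeguards such as overlapping windows.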

Common ETL Tools and Technologies

Several tools facilitate the ETL process, helping organisations automate and streamline data integration. These tools are vital in ensuring efficiency and accuracy in the ETL workflow.

Common ETL tools include:

- Informatica PowerCenter: A widely used ETL tool that offers robust data integration capabilities.

- Talend: An open-source solution that provides various data management features.

- Microsoft SQL Server Integration Services (SSIS): A component of Microsoft SQL Server for data extraction, transformation, and loading.

- Apache NiFi: An open-source tool designed for data flow automation and ETL processes.

These tools enable businesses to implement efficient ETL processes, ensuring their data is accurate, accessible, and ready for analysis.

What is ELT?

ELT, which stands for Extract, Load, Transform, is a data integration process that reverses the order of the last two steps in ETL. In ELT, data is extracted from its source and then loaded into a storage system, such as a data lake or data warehouse, before being transformed.

This approach is particularly well-suited for environments that handle large volumes of data and require flexible data processing.

ELT is a modern data integration methodology that emphasises efficiency and scalability. It allows organisations to pull raw data directly from various sources and load it into a centralised repository without prior transformation.

This methodology takes advantage of the processing power of modern data storage solutions, enabling organisations to perform complex transformations and analytics directly on the data in its native format.

Explanation of the Process

The ELT process consists of three main components, each critical to effective data management and analysis. Understanding these components can help organisations optimise their data integration strategies.

Extract

The first step in the ELT process is Extract, where data is pulled from a wide range of sources. These sources can include traditional databases, cloud applications, APIs, and streaming data. The extraction phase is crucial because it captures the raw data necessary for analysis.

By pulling data from diverse sources, organisations ensure they have a comprehensive view of their information landscape.

Load

After extraction, the next step is Load. In this phase, the extracted data is loaded directly into a storage solution, such as a data lake or a data warehouse. This approach allows organisations to store vast amounts of data without needing immediate transformation.

The flexibility of loading raw data means that organisations can retain all available information, enabling future analysis and transformation as business needs evolve.
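A minimal sketch of loading data without transforming it first, again using `sqlite3` as a stand-in for a data lake or warehouse. Each record is stored verbatim as a JSON string, so records with different fields can land in the same table. The `raw_events` table and `load_raw` function are hypothetical names.

```python
import json
import sqlite3


def load_raw(conn, source, records):
    """Land records exactly as extracted: one JSON string per record, plus lineage columns."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS raw_events ("
        " id INTEGER PRIMARY KEY AUTOINCREMENT,"
        " source TEXT NOT NULL,"
        " payload TEXT NOT NULL,"
        " loaded_at TEXT DEFAULT CURRENT_TIMESTAMP)"
    )
    with conn:  # commit the whole batch as one transaction
        conn.executemany(
            "INSERT INTO raw_events (source, payload) VALUES (?, ?)",
            [(source, json.dumps(record)) for record in records],
        )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    # Records with different shapes are loaded side by side; no field is dropped.
    load_raw(conn, "clickstream", [
        {"user": "alice", "clicks": 3},
        {"user": "bob", "clicks": None, "referrer": "ad"},
    ])
    for source, payload in conn.execute("SELECT source, payload FROM raw_events"):
        print(source, payload)
```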

Transform

The final step in the ELT process is Transform. Unlike ETL, where transformation occurs before loading, ELT performs data transformation within the destination system after loading. This approach leverages the computational power of modern data warehouses to process and transform the data as needed.

Organizations can perform various transformations, including data cleaning, normalization, and aggregation, allowing for dynamic and on-demand analytics.
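The sketch below shows the ELT order end to end: raw strings are loaded into a staging table unchanged, and the cleaning, normalisation, and aggregation then run as SQL inside the destination. SQLite stands in for a cloud warehouse here and the table names are hypothetical; on a real platform, a similar `CREATE TABLE ... AS SELECT` statement would run on the warehouse's own compute.

```python
import sqlite3


def run_elt(conn, raw_rows):
    # Load: land the extracted strings as-is in a staging table.
    conn.execute("CREATE TABLE raw_orders (order_id TEXT, country TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", raw_rows)

    # Transform: the destination's SQL engine does the work after loading.
    conn.execute(
        """
        CREATE TABLE country_totals AS
        SELECT UPPER(TRIM(country)) AS country,                     -- normalise codes
               SUM(CAST(NULLIF(TRIM(amount), '') AS REAL)) AS total -- blanks become NULL
        FROM (SELECT DISTINCT order_id, country, amount FROM raw_orders) -- drop exact duplicates
        GROUP BY UPPER(TRIM(country))                                -- aggregate per country
        """
    )
    conn.commit()
    return dict(conn.execute("SELECT country, total FROM country_totals"))


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    raw = [("1", " uk ", "19.99"), ("1", " uk ", "19.99"), ("2", "US", ""), ("3", "us", "12.50")]
    print(run_elt(conn, raw))
```

Because `raw_orders` is kept alongside `country_totals`, the transformation can be rewritten and rerun later without extracting the data again.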

Common ELT Tools and Technologies

Several platforms and technologies are commonly used to run the ELT process, typically serving as the destination where raw data is loaded and then transformed. Some popular options include:

- Google BigQuery: A serverless data warehouse that enables efficient data analysis.

- Snowflake: A cloud-based data platform that offers scalable storage and processing capabilities.

- Amazon Redshift: A data warehouse service that allows organizations to run complex queries against large datasets.

- Apache Spark: An open-source analytics engine that supports large-scale data processing and transformation.

These tools empower organizations to implement effective ELT processes, ensuring their data is readily available for analysis while maximizing performance and scalability.

Key Differences Between ETL and ELT

Understanding the differences between ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) is crucial for organizations seeking to optimize their data processing workflows. Each approach serves distinct needs and possesses unique characteristics that affect how data is handled. Here, we explore the key differences in data processing sequence, performance, data storage, flexibility, and scalability.

Data Processing Sequence

The sequence of operations is what most clearly distinguishes ETL from ELT. In ETL, the process begins with Extracting data from various sources such as databases, APIs, and flat files. Next, the data undergoes Transformation, in which it is cleaned, normalised, and organised into a suitable format.

Only after these transformations are completed is the data Loaded into the target data warehouse. This order ensures that only well-structured data enters the warehouse, which makes ETL well suited to traditional data environments where data quality is paramount.

Conversely, ELT flips this sequence. After Extracting data from various sources, the data is directly Loaded into a data lake or data warehouse in its raw form. Transformation occurs after loading, leveraging the computational power of the destination system.

This shift in sequence allows organizations to work with raw data and perform transformations as needed, making ELT particularly advantageous in environments where quick access to data is essential.
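The difference in ordering can be reduced to a few lines of Python. In this hypothetical sketch a plain dictionary plays the role of the warehouse; the point is only where `transform` runs relative to the load, and what ends up stored.

```python
def extract():
    """Hypothetical source rows with messy formatting and a duplicate."""
    return [" alice ", "BOB", " alice "]


def transform(rows):
    """Trim, lower-case, and de-duplicate names, preserving first-seen order."""
    return list(dict.fromkeys(row.strip().lower() for row in rows))


def run_etl():
    warehouse = {}
    # ETL: transform in flight; only the shaped result is stored.
    warehouse["customers"] = transform(extract())
    return warehouse


def run_elt():
    warehouse = {}
    # ELT: load the raw rows first...
    warehouse["raw_customers"] = extract()
    # ...then transform using data already inside the target.
    warehouse["customers"] = transform(warehouse["raw_customers"])
    return warehouse


if __name__ == "__main__":
    print(run_etl())
    print(run_elt())
```

Both runs produce the same `customers` list, but only the ELT warehouse still holds `raw_customers`, which ties the sequence difference to the storage difference.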

Performance

Performance is another critical factor when comparing ETL and ELT, especially when dealing with large data sets. ETL processes can be resource-intensive and time-consuming because every record must pass through the transformation step, often on a separate processing server, before any of it reaches the warehouse.

This delay can hinder the timeliness of data availability, which may be detrimental in fast-paced business environments.

In contrast, ELT capitalizes on the capabilities of modern cloud-based data warehouses, which are designed to handle large volumes of data efficiently. Since data is loaded before transformation, organizations can quickly access and analyze raw data without waiting for extensive preprocessing.

This speed makes ELT more suitable for big data applications where rapid data retrieval and real-time analysis are critical.

Data Storage

The approach to data storage differs fundamentally between ETL and ELT. ETL typically loads transformed data into a structured format within a data warehouse. This structured environment ensures that the data is organized and optimized for analytical queries, making it easier for users to derive insights.

However, the original raw data is often discarded after transformation, so it is not available for future analysis.

Conversely, ELT retains the raw data in a data lake or warehouse, enabling organizations to store both transformed and untransformed data. This flexibility allows Data Scientists and Analysts to return to the original data for new analyses or future transformations.

Organizations can respond to new business questions without re-extracting and loading data, as all raw data is readily available.
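The following sketch, using `sqlite3` as a stand-in warehouse with hypothetical table names and sample values, illustrates why retaining raw data matters. The original report aggregates by country and ignores the `channel` column, yet a later question about sales by channel can still be answered from the retained raw table without returning to the source systems.

```python
import sqlite3


def build_warehouse():
    conn = sqlite3.connect(":memory:")
    # ELT keeps the raw table, including columns no current report uses.
    conn.execute(
        "CREATE TABLE raw_orders (order_id INTEGER, country TEXT, channel TEXT, amount REAL)"
    )
    conn.executemany(
        "INSERT INTO raw_orders VALUES (?, ?, ?, ?)",
        [(1, "UK", "web", 20.0), (2, "US", "store", 5.0), (3, "US", "web", 12.5)],
    )
    # The original report only needed totals per country.
    conn.execute(
        "CREATE TABLE sales_by_country AS "
        "SELECT country, SUM(amount) AS total FROM raw_orders GROUP BY country"
    )
    conn.commit()
    return conn


def answer_new_question(conn):
    """Derive a new model from the retained raw data; no re-extraction is needed."""
    conn.execute(
        "CREATE TABLE sales_by_channel AS "
        "SELECT channel, SUM(amount) AS total FROM raw_orders GROUP BY channel"
    )
    conn.commit()
    return dict(conn.execute("SELECT channel, total FROM sales_by_channel"))


if __name__ == "__main__":
    conn = build_warehouse()
    print(answer_new_question(conn))
```

If a pipeline had loaded only `sales_by_country`, the channel information would be gone and the new question would require extracting the data again.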

Flexibility and Scalability

Flexibility and scalability are crucial considerations when choosing between ETL and ELT. ETL processes are often rigid, requiring a predefined schema and a comprehensive transformation plan before data loading. Changes in data requirements may necessitate significant rework and time, making ETL less adaptable to evolving business needs.

In contrast, ELT offers greater flexibility and scalability. With raw data readily accessible, organizations can adapt their data processing strategies to accommodate new analytics demands.

This adaptability enables teams to experiment with different data transformation techniques or explore new data sources without the constraints of a predefined pipeline. As businesses grow and their data needs evolve, ELT can scale more effectively to meet these changes.

Advantages and Disadvantages of ETL and ELT

When deciding between ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform), understanding each approach’s advantages and disadvantages is essential. Both methods have unique benefits and drawbacks, which can significantly impact your data processing strategy.

ETL: Advantages and Disadvantages

ETL has been a foundational approach to data integration for many organizations. Understanding its advantages can help businesses recognize its value, while being aware of its disadvantages can guide them toward better decisions about their data workflows.

Advantages:

- Data Quality: ETL emphasizes data transformation before loading, ensuring high data quality. It allows for thorough data cleansing and validation, making it suitable for analytical tasks requiring accurate information.

- Structured Data: ETL maintains a structured format by transforming data before it is loaded. This organization aids consistency and improves accessibility for reporting and analytics.

- Performance: For smaller data volumes, ETL often performs well, as the transformation process is completed before loading, leading to faster query responses within the data warehouse.
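The Data Quality advantage comes from validating records before they are loaded. A minimal sketch, with hypothetical validation rules and field names, splits incoming rows into those that proceed to the load step and those held back for review:

```python
def validate(row):
    """Return the rule violations for one record (the rules are illustrative)."""
    errors = []
    if not row.get("order_id"):
        errors.append("missing order_id")
    try:
        if float(row.get("amount")) < 0:
            errors.append("negative amount")
    except (TypeError, ValueError):
        errors.append("amount is not numeric")
    return errors


def split_valid(rows):
    """Only rows that pass every rule go on to be loaded; the rest are quarantined."""
    valid, rejected = [], []
    for row in rows:
        problems = validate(row)
        if problems:
            rejected.append((row, problems))
        else:
            valid.append(row)
    return valid, rejected


if __name__ == "__main__":
    valid, rejected = split_valid([
        {"order_id": "1", "amount": "10"},
        {"order_id": "", "amount": "5"},
        {"order_id": "3", "amount": "abc"},
    ])
    print(len(valid), "row(s) ready to load")
    for row, problems in rejected:
        print("rejected:", row, problems)
```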

Disadvantages:

- Time-Consuming: The ETL process can be lengthy, as data transformation occurs before loading. This delay can slow down the availability of data for analysis.

- Less Flexible: ETL workflows can be rigid. Changes in data sources or requirements may necessitate significant modifications to the ETL pipeline, leading to increased maintenance efforts and costs.

ELT: Advantages and Disadvantages

ELT is gaining traction, especially with the rise of big data and cloud computing. Its modern approach to data integration offers distinct advantages, but it also comes with challenges that organizations must address.

Advantages:

- Speed: ELT processes can handle large volumes of data quickly. By loading raw data directly into the data storage system, Analysts can access data faster for querying and analysis.

- Flexibility: ELT allows for greater flexibility in handling various data types and structures. Analysts can transform data on demand, adapting to changing business needs and requirements without overhauling the entire pipeline.

- Cost-Effective: With the rise of cloud storage solutions, ELT can be more cost-effective. It leverages the computing power of cloud platforms, allowing organizations to scale resources as needed.

Disadvantages:

- Complexity: The ELT approach can introduce complexity in managing and transforming large datasets. Organizations need robust data management tools to ensure efficient data processing and transformation within the storage system.

- Potential Data Quality Issues: Since ELT loads raw data before transformation, there is a risk of poor data quality. Inconsistent or erroneous data may enter the analysis stage, leading to misleading insights if not properly managed.
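One way to manage this risk is to run data quality checks inside the destination after loading and before publishing transformed tables. The sketch below uses `sqlite3` and two illustrative SQL checks against a hypothetical `raw_orders` table; the check names and rules are assumptions chosen for the example.

```python
import sqlite3

# Each check returns a count of offending rows; 0 means the check passes.
QUALITY_CHECKS = {
    "missing_amount": "SELECT COUNT(*) FROM raw_orders WHERE amount IS NULL OR TRIM(amount) = ''",
    "duplicate_order_id": "SELECT COUNT(*) - COUNT(DISTINCT order_id) FROM raw_orders",
}


def run_checks(conn):
    """Run every check against the loaded data and return its failure count."""
    return {name: conn.execute(sql).fetchone()[0] for name, sql in QUALITY_CHECKS.items()}


def safe_to_publish(conn):
    """Gate downstream transformations on all checks passing."""
    return all(count == 0 for count in run_checks(conn).values())


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE raw_orders (order_id TEXT, amount TEXT)")
    conn.executemany(
        "INSERT INTO raw_orders VALUES (?, ?)",
        [("1", "19.99"), ("1", "19.99"), ("2", ""), ("3", None)],
    )
    print(run_checks(conn), safe_to_publish(conn))
```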

By weighing these advantages and disadvantages, organizations can decide whether ETL or ELT best suits their data processing needs. Understanding these factors is crucial in optimizing data workflows and achieving effective data-driven insights.

Bottom Line

Understanding the differences between ETL and ELT is essential for organizations aiming to optimize their data management processes. Both methods offer unique advantages and disadvantages, so it is crucial to evaluate your specific data needs. By selecting the right approach, businesses can enhance data quality, flexibility, and performance and drive informed decision-making.

Frequently Asked Questions

What is the Primary Difference Between ETL and ELT?

The primary difference between ETL and ELT lies in their processing sequence. ETL transforms data before loading it into a data warehouse, ensuring high data quality. In contrast, ELT loads raw data directly into storage and transforms it later, allowing for quicker access and flexible data analysis.

When Should I Choose ETL Over ELT?

Choose ETL when data quality is paramount, especially in environments requiring extensive data cleansing and transformation before analysis. It is ideal for smaller data volumes and structured data environments. ETL ensures that only reliable, well-organized data enters the data warehouse, supporting accurate decision-making and reporting.

What are the Advantages of Using ELT for Data Processing?

ELT offers several advantages, including speed, flexibility, and cost-effectiveness. By loading raw data into storage, Analysts can quickly access it for analysis without waiting for transformations. This adaptability allows organizations to respond to evolving data needs and leverage cloud storage solutions to manage costs effectively.