Transfer Learning in Deep Learning: A Brief Overview

Collecting large volumes of data, filtering them, and then interpreting them is a challenging task. What if you could start from a pre-trained model instead of training a new one from scratch? That is exactly what Transfer Learning offers.

What is Transfer Learning?

Transfer Learning is a Machine Learning technique in which a model pre-trained on a large, general task is reused as the starting point for a new, related task. Because the new model starts from the weights the pre-trained model has already learned, training is faster and easier, and it saves the time that would otherwise go into training a model from scratch.

Simply put, it applies the knowledge gained by the pre-trained model to the new task, which reduces the amount of data and computation needed. Transfer Learning has applications in computer vision, NLP, recommendation systems, and robotics, which makes it a powerful and efficient tool in Machine Learning.

Examples of Transfer Learning in Deep Learning include:

- Using a pre-trained image classification network for a new image classification task with a similar dataset.

- Fine-tuning a pre-trained language model for text classification on a new text dataset.

- Adapting a pre-trained object detection network to a related task, such as segmentation, on a new dataset.

These examples demonstrate how Transfer Learning can solve problems quickly and effectively with limited data and computational resources.

What Happens At The Core?

It helps to understand what happens at the core. The process uses a pre-trained model to construct a new model with similar functionality but different parameters or data sources. The objective behind Transfer Learning is to share certain features across tasks: the new model reuses some of the general features the existing model has already learned instead of learning them from scratch. This saves the developer's time and often yields a more accurate model than the limited target data alone would allow.

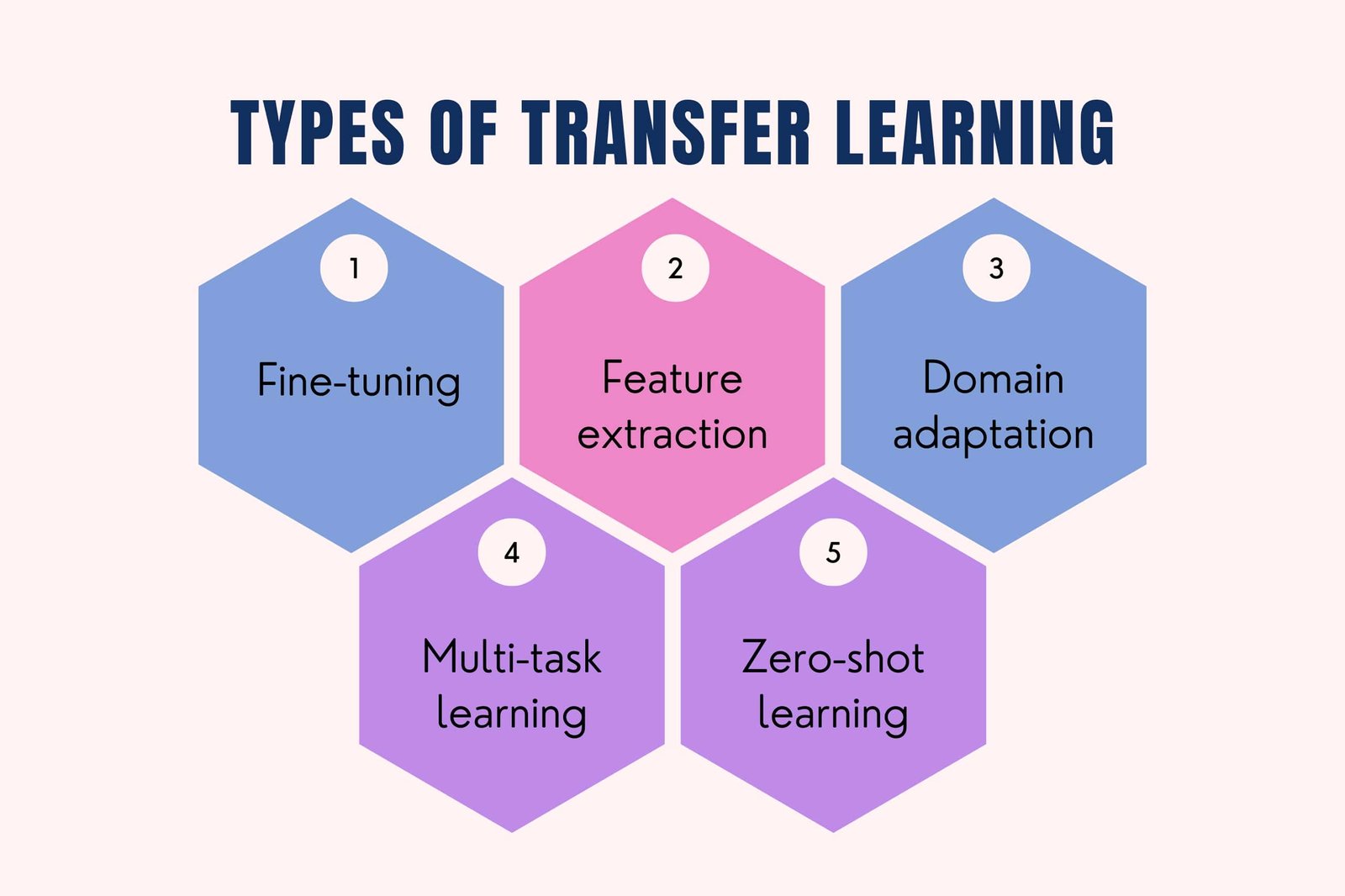
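
The weight-reuse idea can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not a deep network: the model is a one-variable linear function, and the source task, target task, learning rate, and step counts are all hypothetical values chosen for the example. The model is first trained on a source task with plenty of data, and its weights then serve as the starting point for a related target task that has only three examples.

```python
def loss(w, b, data):
    """Mean squared error of the line y = w * x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def fit(w, b, data, lr, steps):
    """Run full-batch gradient descent starting from the weights (w, b)."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


# Source task (hypothetical): plenty of data drawn from y = 3x + 1.
source = [(i / 10, 3 * (i / 10) + 1) for i in range(-20, 21)]
# Target task (hypothetical): a related rule, y = 3x + 1.5, with only 3 examples.
target = [(x, 3 * x + 1.5) for x in (-1.0, 0.0, 1.0)]

# 1. Pre-train on the source task.
pre_w, pre_b = fit(0.0, 0.0, source, lr=0.1, steps=500)

# 2. Spend the same small training budget on the target task,
#    once starting from zero and once starting from the source weights.
scratch = fit(0.0, 0.0, target, lr=0.1, steps=10)
transferred = fit(pre_w, pre_b, target, lr=0.1, steps=10)

scratch_loss = loss(*scratch, target)
transfer_loss = loss(*transferred, target)
print(f"from scratch: {scratch_loss:.4f}  transferred: {transfer_loss:.4f}")
```

With the same ten update steps, the run that starts from the source weights ends with a lower loss on the target data, because the slope has already been learned and only the intercept still needs adjusting.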

Types of Transfer Learning in Deep Learning

Fine-tuning: A pre-trained model serves as the starting point and is then trained further on the new task, typically with a lower learning rate.

Feature extraction: Here, the developer uses a pre-trained model to extract features from new data and then trains a new classifier on those features.

Domain adaptation: It works by adapting a pre-trained model to a new domain by fine-tuning it on the target domain data.

Multi-task learning: Here, the focus is to train a single model on multiple tasks to improve performance on all tasks.

Zero-shot learning: It involves the use of pre-trained models to make predictions on new classes without any training data for those classes.
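
The first two strategies differ mainly in which weights are allowed to change. The sketch below, in plain Python, assumes a deliberately tiny network: one tanh unit stands in for the pre-trained backbone and a linear layer acts as the head. The `pretrained` values, the target data, and the learning rates are hypothetical stand-ins, not weights from a real model. Feature extraction freezes the backbone and trains only the head, while fine-tuning updates every weight with a lower learning rate.

```python
import math


def forward(params, x):
    """Tiny network: one tanh 'backbone' unit followed by a linear 'head'."""
    h = math.tanh(params["w1"] * x + params["b1"])  # backbone feature
    return params["w2"] * h + params["b2"], h        # prediction, feature


def mse(params, data):
    """Mean squared error of the network on (x, y) pairs."""
    return sum((forward(params, x)[0] - y) ** 2 for x, y in data) / len(data)


def train(params, data, lr, steps, trainable):
    """Full-batch gradient descent that updates only the names in `trainable`."""
    params = dict(params)  # copy, so the caller's weights stay untouched
    for _ in range(steps):
        grads = {name: 0.0 for name in params}
        for x, y in data:
            pred, h = forward(params, x)
            err = 2.0 * (pred - y) / len(data)
            grads["w2"] += err * h
            grads["b2"] += err
            d_pre = err * params["w2"] * (1.0 - h * h)  # back through tanh
            grads["w1"] += d_pre * x
            grads["b1"] += d_pre
        for name in trainable:
            params[name] -= lr * grads[name]
    return params


# Hypothetical stand-in for weights learned on a large source task.
pretrained = {"w1": 1.0, "b1": 0.0, "w2": 1.0, "b2": 0.0}
# Small, related target dataset (hypothetical): y = 2 * tanh(x) + 0.5.
target = [(x, 2.0 * math.tanh(x) + 0.5) for x in (-1.0, 0.0, 1.0)]

# Feature extraction: backbone (w1, b1) frozen, only the head is trained.
feat = train(pretrained, target, lr=0.1, steps=200, trainable=("w2", "b2"))
# Fine-tuning: every weight is updated, with a lower learning rate.
fine = train(pretrained, target, lr=0.01, steps=200,
             trainable=("w1", "b1", "w2", "b2"))

print("backbone unchanged after feature extraction:", feat["w1"] == pretrained["w1"])
print("backbone changed after fine-tuning:", fine["w1"] != pretrained["w1"])
```

Passing the list of trainable names explicitly keeps the difference between the two strategies visible in a single argument. Deep learning frameworks express the same choice by marking layers as frozen or trainable.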

Difference Between Transfer Learning and Machine Learning

It’s important to note that transfer learning is a subset of machine learning and leverages techniques from machine learning to achieve its goals.

| Transfer Learning | Machine Learning |
| --- | --- |
| Focuses on reusing pre-trained models for related tasks | A wider domain that encompasses different algorithms and techniques for learning from data |
| Requires fewer labeled examples for training | Training a model from scratch typically needs large volumes of labeled data |
| Usually gives faster results and often better performance when data is limited | Training from scratch is slower, so implementation needs more time |
| Limited to tasks that are related to the source task | Can be applied to a wide range of tasks |

Why is Transfer Learning Gaining Popularity?

Transfer Learning could be a revolutionary addition to the Machine Learning domain. It helps in overcoming some of the drawbacks and bottlenecks of Machine Learning:

Data scarcity: Transfer Learning reduces the reliance on large datasets, because models can be fine-tuned using a limited amount of data. It is especially useful in applications where labelled datasets are scarce or expensive to acquire, such as medical imaging, autonomous driving, and Natural Language Processing (NLP).

Computational cost: Transfer Learning builds on a pre-trained network, which removes the need to create a model from scratch. This makes it computationally less expensive.

Long training time: Training a model from scratch may take days or weeks. With Transfer Learning, training time comes down dramatically because the model starts from pre-trained weights.

Domain adaptation: Transfer Learning enables models to be adapted to new domains by fine-tuning pre-trained models on task-specific data.
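
The computational saving can be made concrete by counting how many weights actually get updated. The arithmetic below assumes a hypothetical fully connected network with layers of 784, 256, 128, and 10 units; the sizes are illustrative and do not come from any specific model. If the first two layers are kept frozen as a pre-trained backbone, only the final layer needs gradients and updates.

```python
# Hypothetical fully connected network: (inputs, outputs) per layer.
layers = [(784, 256), (256, 128), (128, 10)]


def n_params(n_in, n_out):
    """Weights plus biases of one dense layer."""
    return n_in * n_out + n_out


total = sum(n_params(n_in, n_out) for n_in, n_out in layers)
head_only = n_params(*layers[-1])  # only the last layer is trained

print(f"all layers trainable: {total} parameters")
print(f"head only trainable:  {head_only} parameters "
      f"({head_only / total:.2%} of the total)")
```

In this example 1,290 of 235,146 parameters are trained, roughly 0.5%. The forward pass still runs through the frozen layers, so the saving applies to gradient computation and weight updates rather than to inference.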

Transfer Learning Examples

Convolutional Neural Networks (CNNs): This type of Deep Learning network is used for image classification, object detection, and segmentation tasks. Starting from the pre-trained weights of existing models such as VGG16 or ResNet50, developers can quickly build networks fine-tuned for specific tasks, with better accuracy and shorter training times than would otherwise be possible.

Recurrent Neural Networks (RNNs): These networks are used in Natural Language Processing applications such as text classification, sentiment analysis, and machine translation. Using the knowledge learned from large datasets of previously labelled texts, developers can quickly train new RNNs on smaller datasets with higher accuracy than would otherwise be possible. This makes it easier to create custom NLP systems that are more accurate and require less data than traditional methods.

Robotics: Using Transfer Learning, developers can improve robotic tasks like navigation, motion control, and manipulation. A model trained in one setting can be reused in another with minimal modification, so robots can learn to navigate more accurately when facing unfamiliar terrain or objects.

Healthcare: Transfer Learning has been used to analyze medical images such as CT scans and X-rays, where models pre-trained on large image datasets can be fine-tuned for specific tasks such as disease diagnosis.

The Future is Here!

In conclusion, Transfer Learning is an effective technique. It has the potential to improve the accuracy and speed of development for many tasks. By leveraging pre-trained models or features from existing datasets, developers can quickly construct more accurate models without having to start from scratch each time.

In the future, techniques like Transfer Learning will find widespread application across industries. This highlights the importance of datasets and their interpretation in building AI models, and it is likely to increase the demand for Data Science professionals. If you are looking for a progressive career opportunity, this is the right time to enrol for the Data Science Certification Program, a comprehensive learning journey that helps you develop strong skills in data science, programming languages, Artificial Intelligence, and Machine Learning. Take a step ahead, join Pickl.AI, and start your learning journey today.

![What is Transfer Learning in Deep Learning? [Examples & Application]](https://www.pickl.ai/blog/wp-content/uploads/2023/02/Transfer-learning.jpg)