Summary: Hyperparameters are external parameters set before training a Machine Learning model, such as learning rate, number of trees, or batch size. Unlike model parameters learned during training, hyperparameters influence the training process and model complexity. Effective hyperparameter tuning is crucial for optimizing model performance and avoiding overfitting or underfitting.

Introduction

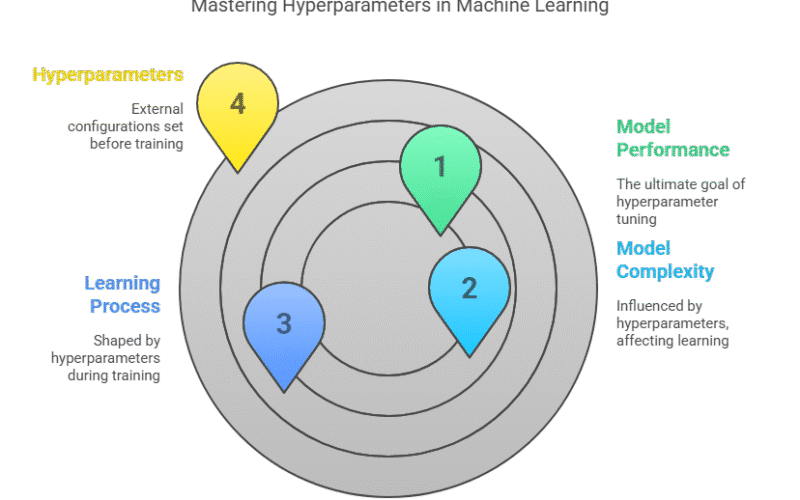

In the world of Machine Learning, hyper parameters in Machine Learning are the external configurations you set before training your model. They shape how the model learns, how complex it becomes, and how well it performs. Mastering hyperparameters is like mastering the art of cooking: it’s the secret sauce that can take your models from average to exceptional.

Key Takeaways

- Hyperparameters guide how a model learns from data during training.

- Proper tuning improves accuracy, robustness, and generalization of models.

- Different algorithms require different sets of hyperparameters to tune.

- Hyperparameter tuning balances model bias and variance effectively.

- Automated tools simplify and accelerate the hyperparameter optimization process.

What Are Hyperparameters in Machine Learning?

Hyperparameters in Machine Learning are values or settings that you specify before the learning process begins. They are not learned from the data; instead, they guide the model’s training process and architecture. Think of them as the dials and switches on a Machine Learning “oven”—they control the cooking process, not the ingredients themselves.

Key Points:

- Set Before Training: Hyperparameters are chosen before the model sees any data.

- Not Learned: Unlike parameters (like weights in a neural network), hyperparameters are not adjusted by the learning algorithm.

- Affect Training and Model Structure: They determine how the model learns and its complexity.

Why Are Hyperparameters Important?

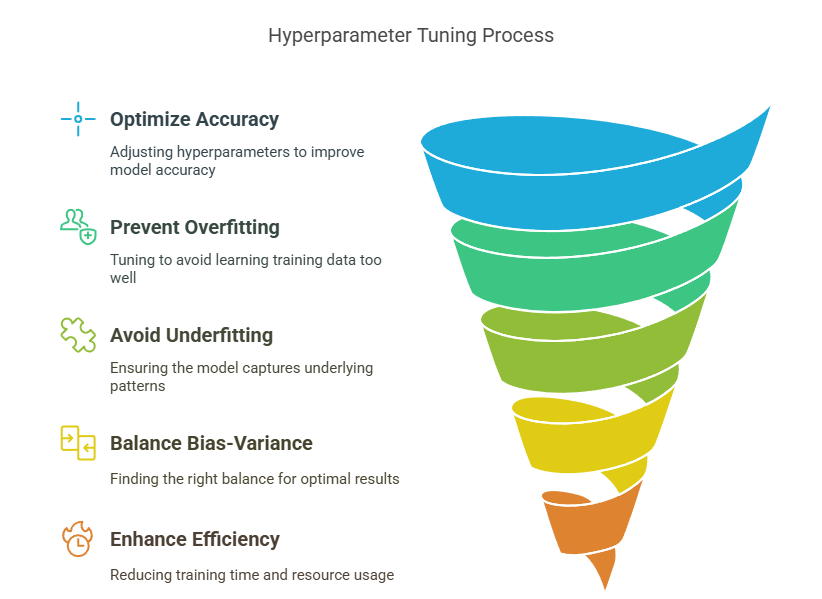

Hyperparameters are critically important in Machine Learning because they directly control the training process and significantly influence a model’s performance, efficiency, and ability to generalize to new, unseen data.

Setting appropriate hyperparameters can help optimize accuracy, prevent overfitting (where the model learns the training data too well and fails to generalize), and avoid underfitting (where the model is too simple to capture underlying patterns).

Hyperparameters also determine key aspects such as model complexity, learning speed, and regularization, allowing practitioners to balance the trade-off between bias and variance for optimal results.

Moreover, they impact computational efficiency, as well-chosen hyperparameters can reduce training time and resource usage. Ultimately, hyperparameters act as the “knobs and levers” that must be carefully tuned to unlock a model’s full potential and ensure it performs well on real-world data.

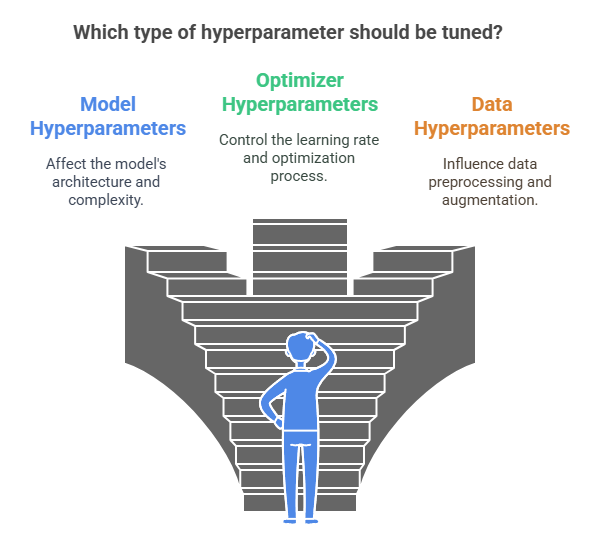

Types of Hyperparameters

There are several types of hyperparameters in Machine Learning, each affecting different aspects of the model and training process. Let’s break them down:

Model Hyperparameters

These define the structure or architecture of your model.

- Examples:

- Number of layers in a neural network

- Number of trees in a random forest

- Maximum depth of a decision tree

Optimizer Hyperparameters

These control how the model learns from data.

- Examples:

- Learning rate

- Batch size

- Momentum

- Optimizer type (SGD, Adam, RMSprop, etc.)

Data Hyperparameters

These influence how data is presented to the model during training.

- Examples:

- Mini-batch size

- Number of epochs

- Data augmentation settings

Regularization Hyperparameters

These help prevent overfitting by adding constraints or penalties.

- Examples:

- Regularization strength (L1, L2 penalties)

- Dropout rate in neural networks

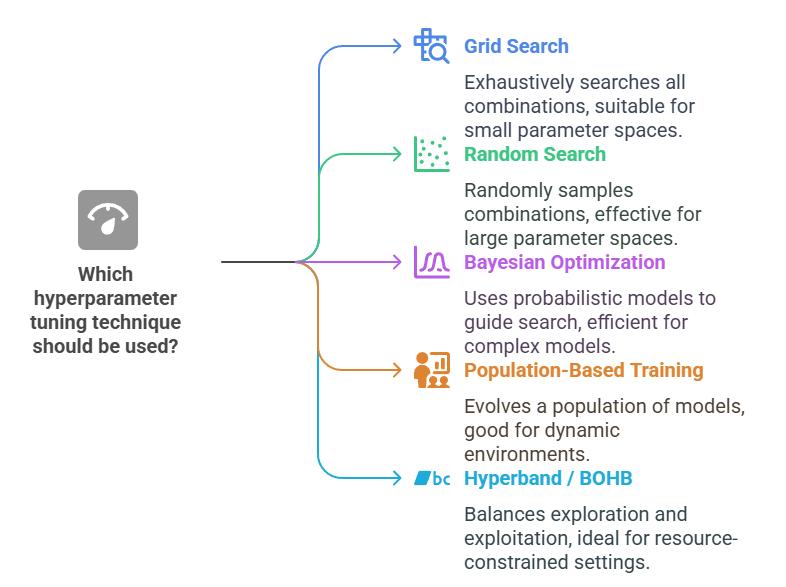

Hyperparameter Tuning Techniques

Hyperparameter tuning is the process of systematically searching for the optimal combination of hyperparameters to maximize a Machine Learning model’s performance. Several techniques—ranging from simple to advanced—are commonly used in practice.

Grid Search

Exhaustively evaluates all possible combinations from a predefined set of hyperparameter values.

- Strengths: Simple to implement; guarantees finding the optimal combination within the search space.

- Limitations: Computationally expensive; scales poorly as the number of hyperparameters or values increases.

Random Search

Randomly samples combinations of hyperparameters from the search space.

- Strengths: More efficient than grid search, especially for high-dimensional spaces.

- Limitations: May miss optimal regions; still requires many evaluations to find good parameters.

Bayesian Optimization

Uses probabilistic models to predict promising hyperparameter settings based on previous results.

- Strengths: Efficient; balances exploration and exploitation to find better hyperparameters faster.

- Limitations: More complex to implement; computational overhead for updating the model during tuning.

Population-Based Training

Runs multiple models in parallel, periodically updating them with the best-performing hyperparameters.

- Strengths: Adapts hyperparameters dynamically during training; suitable for deep learning models.

- Limitations: Resource-intensive; requires a more complex setup and infrastructure.

Hyperband / BOHB (Bayesian Optimization with Hyperband)

Allocates resources adaptively by quickly eliminating poor configurations and focusing on promising ones.

- Strengths: Highly efficient for large hyperparameter spaces; reduces wasted computation.

- Limitations: More complex to implement; may require tuning of its own parameters.

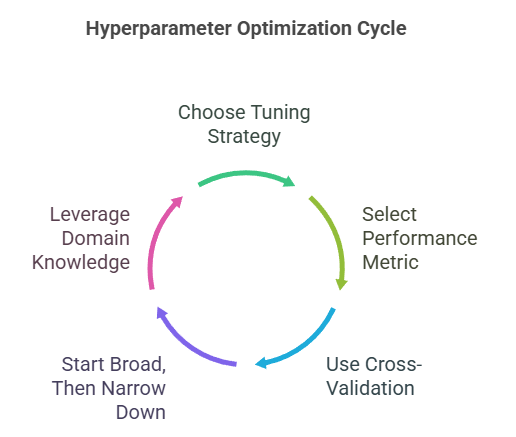

Best Practices for Hyperparameter Tuning

By following these best practices, you can systematically and efficiently optimize your Machine Learning models for better performance and generalization.

Choose an Appropriate Tuning Strategy

For large training jobs, consider advanced techniques like Hyperband, which uses early stopping to quickly eliminate underperforming configurations and reallocates resources to promising ones.

For smaller jobs or when parallelism is needed, random search or Bayesian optimization are effective. Random search allows for many parallel jobs, while Bayesian optimization uses information from previous runs to make smarter decisions but is less scalable for massive parallelization.

Use grid search when reproducibility and transparency are priorities, as it systematically explores every combination and yields consistent results when repeated with the same settings.

Select the Right Performance Metric

Always define and use a performance metric (e.g., accuracy, F1 score, AUC) that aligns with your business or research objective. The metric guides the tuning process toward what matters most for your problem.

Use Cross-Validation

Employ cross-validation during tuning to avoid overfitting and ensure the model generalizes well to new data. This provides a more robust evaluation of each hyperparameter configuration.

Start Broad, Then Narrow Down

Begin with a wide range of hyperparameter values to explore the search space broadly. After identifying promising regions, narrow the search to fine-tune around the best configurations.

Leverage Domain Knowledge

Use prior experience or literature to set sensible bounds for hyperparameters. This can significantly reduce unnecessary computation and focus the search on likely good regions

Tools & Libraries for Hyperparameter Optimization

These libraries cover a wide range of needs, from simple grid searches to advanced, distributed, and automated optimization strategies—making them invaluable tools for improving model performance efficiently.

Optuna

Optuna is a modern, lightweight framework for automatic hyperparameter optimization in Machine Learning. It features a dynamic, define-by-run API, supports both single and multi-objective optimization, and enables efficient, parallel, and distributed searches across large parameter spaces.

Key Features

- Dynamic, Pythonic search space definition with support for conditionals and loops.

- Efficient pruning of unpromising trials using learning curves to save computation.

- Scalable parallel and distributed optimization with built-in visualization dashboard.

Hyperopt

Hyperopt is a flexible Python library for hyperparameter optimization, supporting random search and Bayesian optimization via the Tree of Parzen Estimators (TPE). It handles real-valued, discrete, and conditional spaces, making it suitable for complex and large-scale optimization tasks.

Key Features

- Supports both random search and advanced Bayesian optimization (TPE).

- Handles complex search spaces, including conditional and hierarchical parameters.

- Integrates easily with Keras, Scikit-learn, and other ML frameworks.

Ray Tune

Ray Tune is a scalable hyperparameter tuning library designed for distributed computing. It supports a variety of search algorithms, including Bayesian optimization and Hyperband, and can run parallel trials across multiple nodes for efficient, production-level tuning.

Key Features

- Distributed and parallel execution for large-scale experiments.

- Supports advanced search algorithms like Bayesian optimization and Hyperband.

- Seamless integration with TensorFlow, PyTorch, and other major ML libraries.

Scikit-Optimize (skopt)

Scikit-Optimize is a simple and efficient library for sequential model-based optimization (Bayesian optimization). Built on top of Scikit-learn, it is especially useful for tuning Scikit-learn models with easy-to-use interfaces.

Key Features

- Implements Bayesian optimization for efficient hyperparameter search.

- Simple integration with Scikit-learn pipelines and estimators.

- Lightweight and fast, suitable for small to medium-sized search spaces.

Scikit-learn

Scikit-learn offers classic tools for hyperparameter tuning, such as GridSearchCV and RandomizedSearchCV. It is ideal for straightforward or small-scale optimization tasks and integrates seamlessly with its own ML models.

Key Features

- Provides grid search and randomized search for hyperparameter tuning.

- Easy integration with Scikit-learn estimators and pipelines.

- Well-documented and widely adopted in the ML community.

Auto-Sklearn

Auto-Sklearn is an automated Machine Learning (AutoML) library that includes hyperparameter optimization as part of its pipeline. It can serve as a drop-in replacement for Scikit-learn estimators, automating both model selection and tuning.

Key Features

- Automated model selection and hyperparameter optimization.

- Drop-in compatibility with Scikit-learn API.

- Built-in ensemble construction for improved performance.

KerasTuner

KerasTuner is specialized for hyperparameter optimization of deep learning models built with Keras and TensorFlow. It supports multiple search algorithms, including Bayesian optimization, Hyperband, and random search.

Key Features

- Designed specifically for Keras and TensorFlow models.

- Supports Bayesian Optimization, Hyperband, and Random Search.

- User-friendly API for defining and running tuning experiments.

Conclusion

By understanding and mastering hyper parameters in Machine Learning, you can elevate your models and ensure they deliver the best possible results, no matter the task or dataset.

Hyper parameters in Machine Learning are the critical settings that shape how your model learns and performs. The process of hyperparameter tuning—experimenting with different combinations—can unlock the full potential of your models, leading to better performance, efficiency, and generalization.

Understanding the types of hyperparameters and knowing how to tune them is essential for building robust, accurate, and efficient Machine Learning solutions. With practice and the right tools, you can master the art of hyperparameter tuning and consistently deliver high-performing models.

Frequently Asked Questions

What Are Hyperparameters in Machine Learning and How Are They Different from Parameters?

Hyperparameters are external settings chosen before training, such as learning rate or number of layers, which control the training process. Parameters, like weights and biases, are learned by the model from the data during training and directly impact predictions.

Why is Hyperparameter Tuning Important in Machine Learning?

Hyperparameter tuning is crucial because it helps find the best configuration for a model, improving its accuracy, efficiency, and ability to generalize to new data. Without proper tuning, models may underfit or overfit, leading to poor performance.

What are Some Common Techniques for Hyperparameter Tuning?

Common techniques include grid search, random search, and Bayesian optimization. These methods systematically or randomly explore combinations of hyperparameters to identify the best-performing model, balancing performance and computational efficiency.